Balkind: Moving Huge Data Sets

Could a cart on tracks be used to speed drives across a data center? CS Ass’t. Prof. Jonathan Balkind and researchers investigate.

From the COE News article – "A Novel Approach to Moving Huge Data Sets for Machine Learning"

Data used to move along copper wires. Increasingly in recent years, it has been traveling much faster and more efficiently on light waves via lasers and fiber optic cables, with some short copper connections still in use on data-center racks. That set-up works fine for users who are doing everyday tasks like running a Google search, editing video, or completing an inventory report.

It is, however, far less effective for moving a different kind of data package, one that is many orders of magnitude larger. Even with the most up-to-date technology, transferring massive data sets on the petabyte (PB) scale (a petabyte being one quadrillion bytes), like those used in data analytics, genomics, experimental physics, or in training large language models such as ChatGPT, creates bottlenecks at even the most advanced data centers.

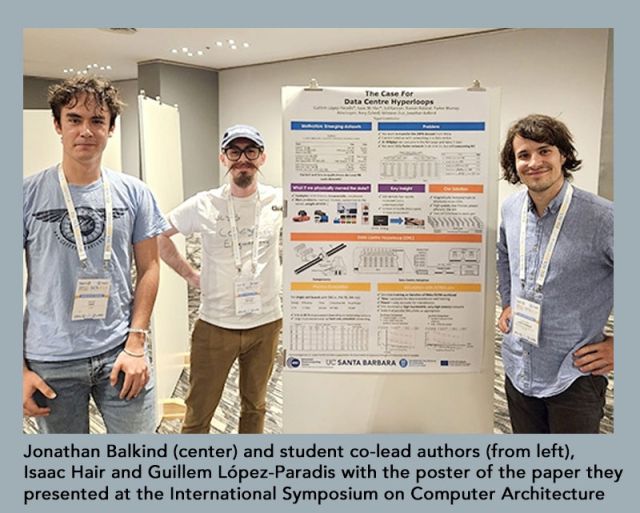

Two students and their advisor, UC Santa Barbara computer science assistant professor Jonathan Balkind, an expert in computer architecture, presented a paper on data transfer at the 2024 International Symposium on Computer Architecture (ISCA), held June 29-July 3 in Buenos Aires, Argentina. The paper, titled "The Case for Data Center Hyperloops," was written by Balkind and co-first authors Guillem López-Paradís, of the Barcelona Supercomputing Center, and Isaac Hair, an undergraduate in the UCSB College of Creative Studies, together with multiple co-authors including PhD student Parker Murray, master’s student Rory Zahedi, and undergraduates Sid Kannan, Roman Rabbat, Alex Lopes, and Winston Zuo. In it, the authors explain that moving Meta’s 29-PB machine-learning dataset from node A to node B using 400Gb/s networking would take roughly an entire week. Completing the same transfer in one hour would require a network 161 times faster than today's, reaching a bandwidth of 64 Tbit/s, which exceeds the capability of today’s top-of-rack switches.
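
The week-long transfer and the 64 Tbit/s target can be checked with a few lines of arithmetic. This is a minimal back-of-envelope sketch, assuming decimal units (1 PB = 10^15 bytes, 1 Gb/s = 10^9 bits/s) and a link running at full line rate with no protocol overhead; the function names are illustrative and do not come from the paper.

```python
# Back-of-envelope check of the transfer-time figures quoted above.
# Assumes decimal SI units and a link at full line rate (no protocol overhead).

BITS_PER_BYTE = 8
SECONDS_PER_DAY = 86_400


def transfer_seconds(dataset_bytes: float, link_bits_per_s: float) -> float:
    """Time to push a dataset through a single link at full line rate."""
    return dataset_bytes * BITS_PER_BYTE / link_bits_per_s


def required_bandwidth(dataset_bytes: float, target_seconds: float) -> float:
    """Link rate (bits/s) needed to move the dataset within target_seconds."""
    return dataset_bytes * BITS_PER_BYTE / target_seconds


dataset = 29e15   # Meta's 29-PB dataset, in bytes
link = 400e9      # 400 Gb/s networking, in bits/s

t = transfer_seconds(dataset, link)
need = required_bandwidth(dataset, 3600)   # one-hour target
print(f"{t / SECONDS_PER_DAY:.1f} days at 400 Gb/s")
print(f"{need / 1e12:.1f} Tb/s for one hour ({need / link:.0f}x faster)")
```

It prints about 6.7 days and about 64.4 Tb/s, or 161 times a 400 Gb/s link, in line with the figures reported from the paper.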

The Balkind team argues for changing data-center architecture to enable “embodied data movement,” that is, physically carrying the data storage media across the data center. Their idea is to build “data-center hyperloops” (DHLs) to physically transport solid-state disks (SSDs) containing large datasets. A hyperloop uses a pair of rails and magnetic levitation to support and move a payload through a low-pressure chamber from one endpoint to another. The team’s proposal applies that paradigm at the data-center scale to shuttle SSDs between compute nodes and cold storage. Using a DHL system, the team writes, “A data center can save energy while also raising performance, thanks to bulk network bandwidth being freed for other applications.” DHL, they write, “obtains energy reductions up to 376 times above 400Gb/s optical fiber.”

It might seem counterintuitive that such immense data hauls could move faster if delivered physically, on disks carried by a vehicle operating in a near-frictionless vacuum tunnel. Balkind, however, is quite at ease in that counterintuitive realm. Asked a week before the conference what kind of reception he was expecting, he said, only half-joking, “I’m hoping for laughs.”

The reception was good, he reported on his return, though the idea might well have drawn a few laughs, since it lies so far outside mainstream thinking about the growing challenge of moving massive data parcels faster and at lower cost. The proposal he developed with his co-authors, however, is hardly a joke. It is conceptual and theoretical for now, and it raises many as-yet-unanswered questions, but, Balkind says, “The raw numbers from our model show that you can move a disk really fast.” Will it actually work? Time will tell, but, he notes, “If the numbers are good, it’s probably worth looking at, right?”

The idea has its roots in a paraphrased quote presented in Andrew Tanenbaum’s well-known textbook on computer networking, which Balkind recalls as, “Never underestimate the bandwidth of a station wagon filled with hard disks hurtling down the highway.” Swap the automobile for a very small “cart” measuring perhaps a few inches per side, and the highway for a hyperloop, a modern relative of the pneumatic tubes that used to be common in factories and at drive-through bank windows, and you have an updated analog of the data-packed station wagon hurtling down the highway.

“We're not using copper or light to move data,” Balkind says. “We're saying instead, ‘Here's a train and a container, and we’re going to load it with disks, and then we just fire it across the data center as fast as possible.’ In terms of the pure mechanical work of moving these disks from A to B, maybe it could be faster and cheaper than whatever we're doing today.”
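
Tanenbaum's station-wagon line is really a statement about effective bandwidth: the bits carried divided by the time the trip takes. A minimal sketch of that arithmetic follows. The cart size, distance, and speed are hypothetical values chosen only for illustration, not numbers from the paper, and the model ignores acceleration as well as the time needed to write data onto and read it off the drives.

```python
# "Station wagon" bandwidth of a disk-carrying cart: payload bits / trip time.
# Every input below is a hypothetical illustration, not a figure from the paper.

def cart_bandwidth_bits_per_s(num_drives: int, drive_bytes: float,
                              distance_m: float, speed_m_per_s: float) -> float:
    """Effective bandwidth of one trip, ignoring acceleration and the time
    spent writing data onto (or reading it off) the drives."""
    payload_bits = num_drives * drive_bytes * 8
    trip_seconds = distance_m / speed_m_per_s
    return payload_bits / trip_seconds


# Hypothetical cart: 100 SSDs of 8 TB each, crossing 200 m at 20 m/s.
bw = cart_bandwidth_bits_per_s(100, 8e12, 200, 20)
print(f"{bw / 1e12:.0f} Tb/s effective")
```

With those made-up inputs it prints 640 Tb/s; a fair comparison against a network link would also have to count loading and unloading time.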

Traditionally, the disks to be moved would have been hard disks, “but those are pretty heavy,” Balkind notes. In his proposed system, he would use SSDs, the densest of which can store around eight terabytes (TB) at a mass of six to eight grams. It’s a technology, he says, “that we haven’t really taken advantage of.”
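
To put that density in perspective, here is a rough count of how many 8-TB SSDs the 29-PB Meta dataset would fill, and what they would weigh. It is a back-of-envelope sketch assuming decimal units and counting only the drives themselves, not the cart, enclosure, or connectors.

```python
import math

# Rough drive count and mass for the 29-PB dataset (decimal units assumed).
DATASET_BYTES = 29e15      # the 29-PB Meta dataset
SSD_BYTES = 8e12           # densest SSDs: about 8 TB each
SSD_GRAMS = (6, 8)         # about six to eight grams per drive

drives = math.ceil(DATASET_BYTES / SSD_BYTES)
# Drives only; a real cart would add enclosure and connector mass.
low_kg, high_kg = (drives * g / 1000 for g in SSD_GRAMS)
print(f"{drives} drives, roughly {low_kg:.0f}-{high_kg:.0f} kg of SSDs")
```

It prints 3625 drives and roughly 22-29 kg, the kind of payload a single small vehicle could plausibly carry in a few trips.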

The concept of physically moving disks has an analog in current everyday practice. Right now, Balkind explains, if you want to get a lot of data into a cloud provider such as Amazon Web Services, “Amazon will let you pay to have a truck come to your door. They connect to your server via a cable, you write your data into this truck full of disks, and they deliver your data to the data center. It’s a much better choice than having to use all of the available network bandwidth you have for the next several weeks to get your data over there.”

That process, however, “is still not very fast,” Balkind says. “The hard drives on the truck spin, so downloading the data takes time. Not only are SSDs data-dense, but you can get data into and out of them much quicker than you can from traditional hard disks.” Not to mention the time lost in transit as a truck motors down a highway.

At a data center, servers are stacked vertically in racks, and the racks abut each other to form long horizontal rows with aisles between them. Some of these long rows house machine-learning supercomputers, the enabling technology for today's generative-AI boom. Balkind imagines the hyperloop running beneath a subfloor, perpendicular to the racks. “We're not moving any part of the server; we're only moving the storage with the data that you need on it,” he explains. “If you want to put data on, you key in a request for the cart. It shows up under your server, and you transfer your data into or out of it. It can be moved around as necessary.”

“It seems that it could be a win,” Balkind continues. “We've only done the early-stage modeling with some simulation elements, and we're not mechanical engineers, so we didn't build anything. And while various assumptions are baked in, we argue that many potential issues can be resolved with careful and creative engineering.”

It is a question of using the system to move only what it makes sense to move that way. “We want to move only things that we have to move, such as very large datasets for machine learning,” Balkind says. “Otherwise, we're burning energy for no good reason. And in terms of speed, we’re up against physics, because as we move the disks faster, we spend quadratically more energy. So we chose the densest storage we could get, a type of SSD known as M.2. They're about three inches long by an inch wide and weigh about a quarter of an ounce. Basically, you just pack a bunch of them together into the cart, and then move the cart. The carts will be in a library some of the time, or they’ll be plugged into a server rack at the bottom or in transit to their next data pickup or delivery destination.”
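
The quadratic cost Balkind mentions follows from the classical kinetic-energy formula, E = ½mv². This minimal sketch uses a hypothetical 1 kg cart (not a figure from the paper) and ignores friction and air drag; only the ratios between speeds matter.

```python
def kinetic_energy_joules(mass_kg: float, speed_m_per_s: float) -> float:
    """Classical kinetic energy E = 1/2 * m * v**2."""
    return 0.5 * mass_kg * speed_m_per_s ** 2


# Hypothetical 1 kg cart: each doubling of speed quadruples the energy
# needed to bring it up to that speed.
for v in (10, 20, 40):
    print(f"{v:>2} m/s -> {kinetic_energy_joules(1.0, v):6.0f} J")
```

Going from 10 m/s to 20 m/s raises the energy from 50 J to 200 J, which is why the team favors packing the densest, lightest drives over simply moving the cart faster.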

As with Tanenbaum’s “station wagon filled with hard disks,” perhaps the power of the Balkind team’s somewhat counterintuitive, but theoretically effective, approach to moving data should not be underestimated.
